Updated: March 1st, 2020

I spent quite some time digging around the web looking for a straightforward way to create a CI/CD pipeline for containerized applications using AWS services. Ideally, I wanted to use the AWS CLI tool as much as possible, since the CLI is much easier to manipulate and change on the fly ( IMHO ).

There are several good articles and examples out there, some using CloudFormation ( which I’ll never do … ugly ), and others with partial examples, but nothing I found was complete.

So here’s what I’ve come up with: a bash shell script, run from the command line, that creates a complete end-to-end CI/CD pipeline for a containerized ( Docker ) application, using these AWS services:

- IAM ( for creating accounts/roles for various services )

- CodeCommit

- CodeBuild

- CodeDeploy

- CodePipeline

- ELB ( load balancer )

- ECS ( Elastic Container Service and Fargate )

- ECR ( Elastic Container Registry )

- S3 ( repo artifacts go here )

- CloudWatch Events ( for the code update trigger )
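Besides those services, the script shells out to a handful of local tools: the aws CLI, jq ( for parsing the CLI's JSON output ), git and docker. A missing tool otherwise shows up halfway through, with some resources already created. Here is a minimal pre-flight sketch, assuming bash; `require_tools` is a hypothetical helper, not part of the original script:

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helper (not part of the original script):
# report every tool in the argument list that is missing from PATH.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check for a tool that is always present on a POSIX system.
require_tools sh && echo "sh found"
```

In the pipeline script itself, a line such as `require_tools aws jq git docker || exit 1` near the top would stop the run before anything is created in AWS.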

The complete set of commands is listed here:

```bash
createccrepo=$( aws codecommit create-repository --repository-name $PROJECT --region $REGION 2>/dev/null )
listccrepo=$( aws codecommit get-repository --repository-name $PROJECT --region $REGION )
load_balancer=$( aws elbv2 create-load-balancer --name $PROJECT-lb --subnets $SUBNETS --security-groups $SECURITY_GROUPS --region $REGION )
target_group_test=$( aws elbv2 create-target-group --name $PROJECT-target-test --protocol HTTP --port 8080 --target-type ip --vpc-id $VPC --region $REGION )
target_group_live=$( aws elbv2 create-target-group --name $PROJECT-target-live --protocol HTTP --port 443 --target-type ip --vpc-id $VPC --region $REGION )
listener80=$( aws elbv2 create-listener --load-balancer-arn "$lbarn" --protocol HTTP --port 80 --default-actions "Type=redirect,RedirectConfig={Protocol=HTTPS,Port=443,Host='#{host}',Query='#{query}',Path='/#{path}',StatusCode=HTTP_301}" --region $REGION )
listener443=$( aws elbv2 create-listener --load-balancer-arn "$lbarn" --protocol HTTPS --port 443 --certificates CertificateArn="$CERTIFICATE_ARN" --ssl-policy "ELBSecurityPolicy-2016-08" --default-actions "Type=forward,TargetGroupArn=$tgarn_live" --region $REGION )
task=$( aws ecs register-task-definition --cli-input-json file://taskdef.json --region $REGION )
service=$( aws ecs create-service --cli-input-json file://$PROJECT-service_def.json --region $REGION )
create_codebuild_role=$( aws iam create-role --role-name $PROJECT-codebuild-role --assume-role-policy-document file://$PROJECT-codebuild-service-role.json )
update_codebuild_role=$( aws iam put-role-policy --role-name $PROJECT-codebuild-role --policy-name $PROJECT-codebuild-policy --policy-document file://$PROJECT-update_code_build_policy.json )
code_build_project=$( aws codebuild create-project --name "$PROJECT" --source "{\"type\": \"CODECOMMIT\",\"location\":\"$repohttp\"}" --artifacts '{"type": "NO_ARTIFACTS"}' --environment "{\"privilegedMode\": true,\"type\": \"LINUX_CONTAINER\",\"image\": \"aws/codebuild/amazonlinux2-x86_64-standard:2.0\",\"computeType\": \"BUILD_GENERAL1_SMALL\",\"imagePullCredentialsType\": \"CODEBUILD\"}" --service-role "arn:aws:iam::$ACCOUNT_ID:role/$PROJECT-codebuild-role" --region $REGION )
createcodepipepolicy=$( aws iam create-role --role-name $PROJECT-code-pipe-policy-role --assume-role-policy-document file://$PROJECT-code-pipe-policy-role.json )
updatecodepipepolicy=$( aws iam put-role-policy --role-name $PROJECT-code-pipe-policy-role --policy-name $PROJECT-code-pipe-policy --policy-document file://$PROJECT-code-pipe-policy-update.json )
create_code_pipeline=$( aws codepipeline create-pipeline --cli-input-json file://$PROJECT-codepipe.json --region $REGION )
createecrrepo=$( aws ecr create-repository --repository-name $PROJECT --region $REGION 2>/dev/null )
listecrrepo=$( aws ecr describe-repositories --repository-names $PROJECT --region $REGION )
cloudwatch_role=$( aws iam create-role --role-name $PROJECT-cloudwatch-role --assume-role-policy-document file://$PROJECT-cloudwatch_role_trust_policy.json )
update_cloudwatch_policy=$( aws iam put-role-policy --role-name $PROJECT-cloudwatch-role --policy-name $PROJECT-cloudwatch-policy --policy-document file://$PROJECT-update_cloudwatch_role_policy.json )
cloudwatch_rule=$( aws events put-rule --name "codepipeline-$PROJECT-$BRANCH-rule" --event-pattern "$cloudwatch_rule" --region $REGION )
cloudwatch_target=$( aws events put-targets --rule "codepipeline-$PROJECT-$BRANCH-rule" --targets "Id=codepipeline-$PROJECT-pipeline,Arn=arn:aws:codepipeline:$REGION:$ACCOUNT_ID:$PROJECT-pipeline,RoleArn=arn:aws:iam::$ACCOUNT_ID:role/$PROJECT-cloudwatch-role" --region $REGION )
```

Some explanation of the above commands is needed; here are the basics:

- Most of the above commands return JSON output, which we need to parse to collect specific attributes ( mostly IDs ) before continuing to the next set of commands. To parse the output, we use the “jq” utility. For example, from the first CLI command:

```
$> createccrepo=$( aws codecommit create-repository --repository-name $PROJECT --region $REGION 2>/dev/null )
```

We need to extract the SSH git repo location in order to initialize and create the local git repo as our code starting point:

```
$> repossh=$(echo $createccrepo | jq -r '.repositoryMetadata.cloneUrlSsh' 2>/dev/null )
```

And later in the process:

```
$> git clone $repossh
```

- You’ll also notice that I use variables throughout the code. Several variables are required to run the script ( e.g. $PROJECT and $REGION ); those are identified later.

- Additional structure is required to run the commands as well, along with several embedded templates to support the aws cli commands … a complete script is provided at the end of this page, which includes everything needed to run the process.
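To see the jq extraction in isolation, here is a small sketch run against a trimmed, hand-written sample shaped like the `repositoryMetadata` object that `create-repository` and `get-repository` return ( the repository name and URLs are made up, and real responses carry more fields ). It also shows why the script's `[ -z "$repossh" ]` fallback works: when `create-repository` fails, its stdout is empty and jq prints nothing. `repo_field` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Trimmed, hand-written sample in the shape of CodeCommit repository output.
sample_repo_json='{
  "repositoryMetadata": {
    "repositoryName": "demo",
    "cloneUrlSsh": "ssh://git-codecommit.us-west-2.amazonaws.com/v1/repos/demo",
    "cloneUrlHttp": "https://git-codecommit.us-west-2.amazonaws.com/v1/repos/demo"
  }
}'

# Print one field of .repositoryMetadata. "// empty" makes jq print nothing
# (instead of the string "null") when the field is missing.
repo_field() {
  jq -r --arg f "$1" '.repositoryMetadata[$f] // empty'
}

repossh=$(printf '%s' "$sample_repo_json" | repo_field cloneUrlSsh)
repohttp=$(printf '%s' "$sample_repo_json" | repo_field cloneUrlHttp)
echo "SSH:  $repossh"
echo "HTTP: $repohttp"

# Empty input (a failed create-repository with stderr discarded) yields an
# empty string, which is exactly what triggers the get-repository fallback.
missing=$(printf '' | repo_field cloneUrlSsh)
[ -z "$missing" ] && echo "fallback to get-repository would run"
```

The `// empty` guard is a small hardening over the plain `.repositoryMetadata.cloneUrlSsh` filter: without it, JSON that lacks the field would produce the literal string `null`, and the `-z` check would not fire.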

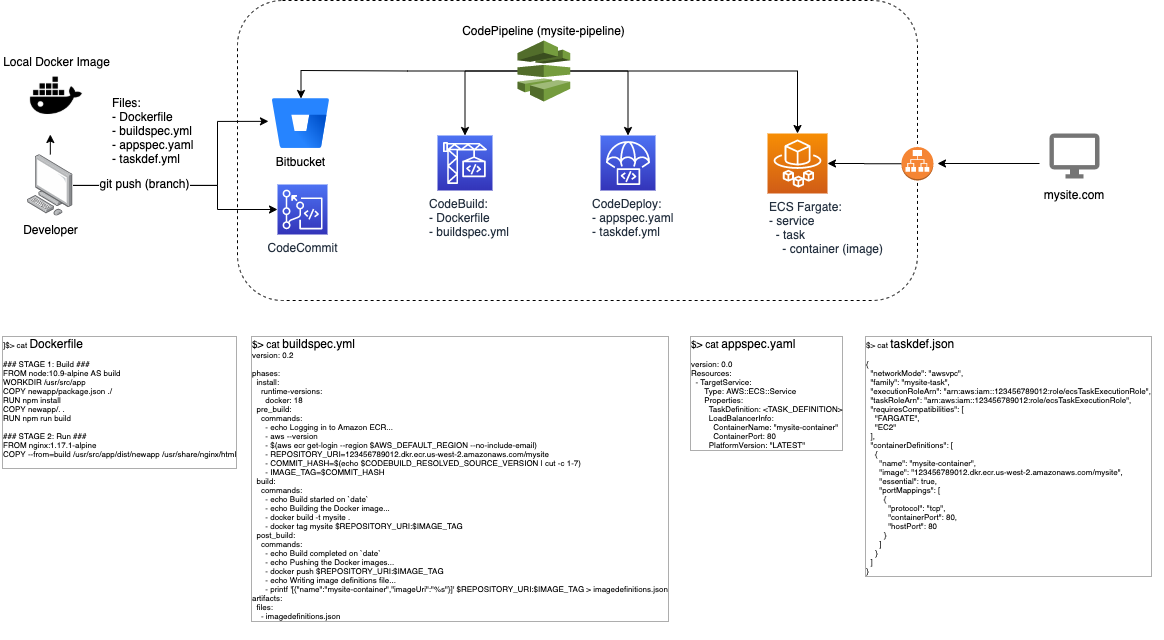

Before we get into the gruesome details, let’s take a look at what the pipeline looks like from a diagram:

As you can see above, the CI/CD pipeline isn’t all that complicated ( sort-of ). There are only a few main components that make up the entire process, along with a few small configuration files. Here’s the basic concept and the general steps to create and launch a web site project:

- The developer or engineer who creates the product ( in this case we’ll assume a web site of some flavor: Django, Angular, Node.js “Express”, Rails, etc. ) containerizes the product using whatever tooling the task requires ( e.g. docker, docker-compose, maven, etc. ).

- Once the product can be built in a repeatable and consistent fashion, the CI/CD pipeline can be built.

- The CI/CD build script is launched with the “project name” as its first input argument.

- Once the pipeline has been created, the git repo can be initialized and merged into the developer’s codebase.

- When the developer is ready to push changes to the development or staging environment, a simple “git push” is all that is needed to trigger the pipeline.

- Once the pipeline sees a change to the code base in the specific branch, the code is sent up the pipeline, built, and deployed into the ECS cluster ( Fargate ).

- When that completes, the website at the load balancer’s URL automatically displays the changes.
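The “git push” trigger in these steps is the CloudWatch Events rule the script creates: its event pattern matches branch updates in the project's CodeCommit repository. As a sketch of an alternative to splicing shell variables into a quoted JSON string, `jq -n --arg` can build the same pattern with the escaping handled for you. `event_pattern` and the sample region, account ID and branch below are hypothetical.

```bash
#!/usr/bin/env bash
# Build the CodeCommit -> CodePipeline event pattern with jq, using the same
# fields as the cloudwatch_rule template in the script.
event_pattern() {
  local region=$1 account_id=$2 project=$3 branch=$4
  jq -c -n \
    --arg arn "arn:aws:codecommit:${region}:${account_id}:${project}" \
    --arg branch "$branch" \
    '{
      source: ["aws.codecommit"],
      "detail-type": ["CodeCommit Repository State Change"],
      resources: [$arn],
      detail: {
        event: ["referenceCreated", "referenceUpdated"],
        referenceType: ["branch"],
        referenceName: [$branch]
      }
    }'
}

pattern=$(event_pattern us-west-1 123456789012 demo main)
echo "$pattern"
# In the real script the result would feed something like:
#   aws events put-rule --name "codepipeline-$PROJECT-$BRANCH-rule" \
#     --event-pattern "$pattern" --region "$REGION"
```

Because jq produces the JSON, a branch or project name containing characters such as quotes cannot break the pattern the way string splicing can.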

More on that later. So let’s tune our script to be a bit more complete, filling in some of the details to make all of this work.

We need to establish our environment along with a few static variables that define our AWS account, VPC, and a few network-specific settings. So we’ll add this set of variables at the beginning of our script:

```bash
PROJECT=$1

if [[ -z $PROJECT ]]; then
  echo "Usage: $0 <YOUR_PROJECT_NAME>"
  exit 1
fi

# subdir for git repo files
DIR='./cicd_deployments/'$PROJECT

# AWS Account ID
ACCOUNT_ID='<AWS_ACCOUNT_ID>'

# Main Account VPC ie "vpc-abc123"
VPC='vpc-<DEFAULT_VPC>'
echo "VPC : $VPC"

# Main Account Subnets ie "subnet-abc123 subnet-xyz890", a list separated by space, minimum 2
SUBNETS='<LIST_OF_SUBNETS>'
echo "Subnets : $SUBNETS"

# Main Account Security Groups ( port 80 and 443 ) ie "sg-abc123 sg-xyz890", a list separated by space
SECURITY_GROUPS='<SECURITY_GROUPS>'
echo "Security Groups : $SECURITY_GROUPS"

# Region ie us-west-1
REGION='<REGION>'
echo "Region : $REGION"

# SSL Certificate ( mysite.com ), double quotes so $REGION and $ACCOUNT_ID expand
CERTIFICATE_ARN="arn:aws:acm:$REGION:$ACCOUNT_ID:certificate/<CERT_ID>"
echo "Cert : $CERTIFICATE_ARN"

# Cluster Arn
CLUSTER_NAME='<YOUR_FARGATE_CLUSTER>'
CLUSTER_ARN="arn:aws:ecs:$REGION:$ACCOUNT_ID:cluster/$CLUSTER_NAME"
echo "Cluster ARN : $CLUSTER_ARN"

# Image ARN ( the ECR image URI )
IMAGE_ARN=$ACCOUNT_ID'.dkr.ecr.'$REGION'.amazonaws.com/'$PROJECT
echo "Image ARN : $IMAGE_ARN"

# Artifact location (S3 bucket)
ARTIFACT_LOCATION='<S3_BUCKET>'
echo "Artifact Location: s3://$ARTIFACT_LOCATION"

# Code branch
BRANCH='<GIT_BRANCH>'
echo "Code Branch : $BRANCH"
echo
```

Most of the above is self-explanatory; just replace the values in angle brackets ( e.g. `<AWS_ACCOUNT_ID>` ) with the settings for your specific environment.
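A forgotten placeholder typically only surfaces later as an AWS API error, after some resources already exist. A small guard can catch it up front. This is a hypothetical helper ( not in the original script ) that uses bash indirect expansion, `${!name}`, to look up each variable by name:

```bash
#!/usr/bin/env bash
# Hypothetical guard: fail if any named variable is empty or still holds a
# <PLACEHOLDER> value from the template.
check_placeholders() {
  local name value bad=0
  for name in "$@"; do
    value=${!name}   # indirect expansion: the value of the variable called $name
    if [[ -z $value || $value == *"<"*">"* ]]; then
      echo "please set $name (current value: '$value')" >&2
      bad=1
    fi
  done
  return "$bad"
}

# Example: one value filled in, one left as the template placeholder.
REGION='us-west-1'
ACCOUNT_ID='<AWS_ACCOUNT_ID>'
check_placeholders REGION ACCOUNT_ID || echo "fix the settings above first"
```

In the script it could run right after the variable block, e.g. `check_placeholders ACCOUNT_ID VPC SUBNETS SECURITY_GROUPS REGION CERTIFICATE_ARN CLUSTER_NAME ARTIFACT_LOCATION BRANCH || exit 1`.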

For the rest of the script, we’ll need to add some templates and supporting files to submit to the various CLI commands. I won’t go into any real detail on these, as they fall outside the scope of this article. So instead, we’ll get right to the punchline: here’s what the entire script looks like.
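One aside on those templates before the full listing: the script writes them by temporarily setting `IFS=''` and echoing multi-line variables unquoted, so field splitting never breaks up the newlines. A heredoc is a common alternative that keeps newlines and quotes exactly as written while still expanding variables. Below is a minimal sketch; `write_taskdef`, the trimmed task definition and the sample values are hypothetical, not part of the script. It finishes with `jq empty`, which parses the file and exits non-zero on malformed JSON, so a template typo is caught locally instead of as an API error.

```bash
#!/usr/bin/env bash
# Write a trimmed Fargate task definition with an unquoted heredoc
# (variables expand, quotes and newlines stay as written), then validate it.
write_taskdef() {
  local project=$1 account_id=$2 image=$3 out=$4
  cat > "$out" <<EOF
{
  "family": "${project}-task",
  "networkMode": "awsvpc",
  "requiresCompatibilities": ["FARGATE"],
  "cpu": "2048",
  "memory": "4096",
  "executionRoleArn": "arn:aws:iam::${account_id}:role/ecsTaskExecutionRole",
  "containerDefinitions": [
    {
      "name": "${project}-container",
      "image": "${image}",
      "essential": true,
      "portMappings": [{ "containerPort": 80, "hostPort": 80, "protocol": "tcp" }]
    }
  ]
}
EOF
  # jq empty prints nothing but fails if the file is not valid JSON.
  jq empty "$out"
}

write_taskdef demo 123456789012 \
  123456789012.dkr.ecr.us-west-1.amazonaws.com/demo:latest taskdef.json \
  && echo "taskdef.json is valid JSON"
```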

```bash
#!/bin/bash

PROJECT=$1
DELETE=$2

function realpause() {
  read -p "$1, press any key to continue, cntl+c to exit ..." -n1 -s
  echo
}

function pause() {
  echo "$1"
  sleep 1
}

if [[ -z $PROJECT ]]; then
  echo "Usage: $0 <YOUR_PROJECT_NAME>"
  exit 1
fi

# subdir for git repo files
DIR='./cicd_deployments/'$PROJECT

# AWS Account ID
ACCOUNT_ID='<AWS_ACCOUNT_ID>'

# Main Account VPC ie "vpc-abc123"
VPC='vpc-<DEFAULT_VPC>'
echo "VPC : $VPC"

# Main Account Subnets ie "subnet-abc123 subnet-xyz890", a list separated by space, minimum 2
SUBNETS='<LIST_OF_SUBNETS>'
echo "Subnets : $SUBNETS"

# Main Account Security Groups ( port 80 and 443 ) ie "sg-abc123 sg-xyz890", a list separated by space
SECURITY_GROUPS='<SECURITY_GROUPS>'
echo "Security Groups : $SECURITY_GROUPS"

# Region ie us-west-1
REGION='<REGION>'
echo "Region : $REGION"

# SSL Certificate ( mysite.com ), double quotes so $REGION and $ACCOUNT_ID expand
CERTIFICATE_ARN="arn:aws:acm:$REGION:$ACCOUNT_ID:certificate/<CERT_ID>"
echo "Cert : $CERTIFICATE_ARN"

# Cluster Arn
CLUSTER_NAME='<YOUR_FARGATE_CLUSTER>'
CLUSTER_ARN="arn:aws:ecs:$REGION:$ACCOUNT_ID:cluster/$CLUSTER_NAME"
echo "Cluster ARN : $CLUSTER_ARN"

# Image ARN ( the ECR image URI )
IMAGE_ARN=$ACCOUNT_ID'.dkr.ecr.'$REGION'.amazonaws.com/'$PROJECT
echo "Image ARN : $IMAGE_ARN"

# Artifact location (S3 bucket)
ARTIFACT_LOCATION='<S3_BUCKET>'
echo "Artifact Location: s3://$ARTIFACT_LOCATION"

# Code branch
BRANCH='<GIT_BRANCH>'
echo "Code Branch : $BRANCH"
echo

if [[ -n $DELETE && $DELETE == 'delete' ]]; then
  realpause "Ready to delete $PROJECT?"
  source ./godelete.sh
  godelete
  exit
fi

realpause "Ready to build ???"

if [ -d "$DIR" ]; then
  echo "dir '$DIR' exists ... exiting"
  exit 1
else
  echo "Creating dir $DIR ..."
  mkdir -p "$DIR"
  echo "cd $DIR ..."
  cd "$DIR" || exit 1
fi

createccrepo=$( aws codecommit create-repository --repository-name $PROJECT --region $REGION 2>/dev/null )
repossh=$(echo $createccrepo | jq -r '.repositoryMetadata.cloneUrlSsh' 2>/dev/null )
repohttp=$(echo $createccrepo | jq -r '.repositoryMetadata.cloneUrlHttp' 2>/dev/null )

# repo already exists: look it up instead
if [ -z "$repossh" ]; then
  listccrepo=$( aws codecommit get-repository --repository-name $PROJECT --region $REGION )
  repossh=$(echo $listccrepo | jq -r '.repositoryMetadata.cloneUrlSsh' )
  repohttp=$(echo $listccrepo | jq -r '.repositoryMetadata.cloneUrlHttp' )
fi
echo "Code Commit Repo SSH: $repossh"
echo "Code Commit Repo HTTP: $repohttp"

pause "Create Load Balancer ..."
load_balancer=$( aws elbv2 create-load-balancer --name $PROJECT-lb --subnets $SUBNETS --security-groups $SECURITY_GROUPS --region $REGION )
dns=$(echo $load_balancer | jq -r '.LoadBalancers[] | "\(.DNSName)"')
lbarn=$(echo $load_balancer | jq -r '.LoadBalancers[] | "\(.LoadBalancerArn)"')
echo "LB DNS: $dns"
echo "LB ARN: $lbarn"

pause "Create Target Group 8080 (test) ..."
target_group_test=$( aws elbv2 create-target-group --name $PROJECT-target-test --protocol HTTP --port 8080 --target-type ip --vpc-id $VPC --region $REGION )
tgarn_test=$(echo $target_group_test | jq -r '.TargetGroups[] | "\(.TargetGroupArn)"')

pause "Create Target Group 443 (live) ..."
target_group_live=$( aws elbv2 create-target-group --name $PROJECT-target-live --protocol HTTP --port 443 --target-type ip --vpc-id $VPC --region $REGION )
tgarn_live=$(echo $target_group_live | jq -r '.TargetGroups[] | "\(.TargetGroupArn)"')
echo "TARGET ARN test: $tgarn_test"
echo "TARGET ARN live: $tgarn_live"

pause "Create LB Listener 80 ..."
listener80=$( aws elbv2 create-listener --load-balancer-arn "$lbarn" --protocol HTTP --port 80 --default-actions "Type=redirect,RedirectConfig={Protocol=HTTPS,Port=443,Host='#{host}',Query='#{query}',Path='/#{path}',StatusCode=HTTP_301}" --region $REGION )

pause "Create LB Listener 443 ..."
listener443=$( aws elbv2 create-listener --load-balancer-arn "$lbarn" --protocol HTTPS --port 443 --certificates CertificateArn="$CERTIFICATE_ARN" --ssl-policy "ELBSecurityPolicy-2016-08" --default-actions "Type=forward,TargetGroupArn=$tgarn_live" --region $REGION )
lstarn443=$(echo $listener443 | jq -r '.Listeners[] | "\(.ListenerArn)"')
echo $lstarn443

pause "Create Task Definition ..."
# IFS='' keeps the unquoted echo from splitting the multi-line templates
OLDIFS=$IFS
IFS=''
# the container image is the project's ECR repo, :latest tag
FARGATE_TASK='{
  "memory": "4096",
  "networkMode": "awsvpc",
  "family": "'$PROJECT'-task",
  "cpu": "2048",
  "executionRoleArn": "arn:aws:iam::'$ACCOUNT_ID':role/ecsTaskExecutionRole",
  "requiresCompatibilities": [
    "FARGATE"
  ],
  "taskRoleArn": "arn:aws:iam::'$ACCOUNT_ID':role/ecsTaskExecutionRole",
  "containerDefinitions": [
    {
      "memoryReservation": 500,
      "name": "'$PROJECT'-container",
      "image": "'$IMAGE_ARN':latest",
      "essential": true,
      "portMappings": [
        {
          "protocol": "tcp",
          "containerPort": 80,
          "hostPort": 80
        }
      ]
    }
  ]
}'
echo $FARGATE_TASK > taskdef.json
IFS=$OLDIFS

pause "Register ECS Task ..."
task=$( aws ecs register-task-definition --cli-input-json file://taskdef.json --region $REGION )

pause "Create Service Definition service_def.json file ..."
# turn the space-separated lists into JSON array items, e.g. "subnet-abc123","subnet-xyz890"
SUBNETS_JSON=$(printf '"%s",' $SUBNETS); SUBNETS_JSON=${SUBNETS_JSON%,}
SG_JSON=$(printf '"%s",' $SECURITY_GROUPS); SG_JSON=${SG_JSON%,}
OLDIFS=$IFS
IFS=''
SERVICE_DEF='{
  "cluster": "'$CLUSTER_NAME'",
  "serviceName": "'$PROJECT'-service",
  "taskDefinition": "'$PROJECT'-task",
  "loadBalancers": [
    {
      "targetGroupArn": "'$tgarn_live'",
      "containerName": "'$PROJECT'-container",
      "containerPort": 80
    }
  ],
  "launchType": "FARGATE",
  "schedulingStrategy": "REPLICA",
  "deploymentController": {
    "type": "ECS"
  },
  "platformVersion": "LATEST",
  "networkConfiguration": {
    "awsvpcConfiguration": {
      "assignPublicIp": "ENABLED",
      "securityGroups": [ '$SG_JSON' ],
      "subnets": [ '$SUBNETS_JSON' ]
    }
  },
  "desiredCount": 1
}'
echo $SERVICE_DEF > $PROJECT-service_def.json
IFS=$OLDIFS

# Create Service
pause "Create ECS Service ..."
service=$( aws ecs create-service --cli-input-json file://$PROJECT-service_def.json --region $REGION )
servarn=$(echo $service | jq -r '.service.serviceArn')

# buildspec.yml is used by codebuild to build the service
pause "Create buildspec.yml file ..."
OLDIFS=$IFS
IFS=''
BUILD_SPEC="
version: 0.2

phases:
  install:
    runtime-versions:
      docker: 18
  pre_build:
    commands:
      - echo Logging in to Amazon ECR...
      - aws --version
      - aws ecr get-login-password --region \$AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com
      - REPOSITORY_URI=$ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com/$PROJECT
      - COMMIT_HASH=\$(echo \$CODEBUILD_RESOLVED_SOURCE_VERSION | cut -c 1-7)
      - IMAGE_TAG=\${COMMIT_HASH:=latest}
  build:
    commands:
      - echo Build started on \`date\`
      - echo Building the Docker image...
      - docker build -t \$REPOSITORY_URI .
      - docker tag \$REPOSITORY_URI \$REPOSITORY_URI:latest
  post_build:
    commands:
      - echo Build completed on \`date\`
      - echo Pushing the Docker images...
      - docker push \$REPOSITORY_URI:latest
      - echo Writing image definitions file...
      - printf '[{\"name\":\"$PROJECT-container\",\"imageUri\":\"%s\"}]' \$REPOSITORY_URI:latest > imagedefinitions.json
artifacts:
  files:
    - imagedefinitions.json
"
echo $BUILD_SPEC > buildspec.yml

# appspec.yaml is used in codedeploy service
pause "Create appspec.yaml file ..."
APP_SPEC='
version: 0.0
Resources:
  - TargetService:
      Type: AWS::ECS::Service
      Properties:
        TaskDefinition:
        LoadBalancerInfo:
          ContainerName: "'$PROJECT'-container"
          ContainerPort: 80
        PlatformVersion: "LATEST"
#Hooks:
#  - BeforeInstall: "BeforeInstallHookFunctionName"
#  - AfterInstall: "AfterInstallHookFunctionName"
#  - AfterAllowTestTraffic: "AfterAllowTestTrafficHookFunctionName"
#  - BeforeAllowTraffic: "BeforeAllowTrafficHookFunctionName"
#  - AfterAllowTraffic: "AfterAllowTrafficHookFunctionName"
'
echo $APP_SPEC > appspec.yaml

pause "Create Docker file ..."
DOCKERFILE='
FROM nginx:alpine
#RUN echo "Hello from Kirk! "$(date)"" > /usr/share/nginx/html/index.html
COPY . /usr/share/nginx/html
'
echo $DOCKERFILE > Dockerfile
IFS=$OLDIFS

pause "Create Code build role and permissions ..."
OLDIFS=$IFS
IFS=''
CODE_BUILD_SERVICE_ROLE='{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Effect": "Allow",
      "Principal": {
        "Service": "codebuild.amazonaws.com"
      }
    }
  ]
}
'
echo $CODE_BUILD_SERVICE_ROLE > $PROJECT-codebuild-service-role.json

update_code_build_policy='{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken",
        "ecr:BatchCheckLayerAvailability",
        "ecr:GetDownloadUrlForLayer",
        "ecr:GetRepositoryPolicy",
        "ecr:DescribeRepositories",
        "ecr:ListImages",
        "ecr:DescribeImages",
        "ecr:BatchGetImage",
        "ecr:GetLifecyclePolicy",
        "ecr:GetLifecyclePolicyPreview",
        "ecr:ListTagsForResource",
        "ecr:DescribeImageScanFindings",
        "ecr:InitiateLayerUpload",
        "ecr:UploadLayerPart",
        "ecr:CompleteLayerUpload",
        "ecr:PutImage"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Resource": [
        "*"
      ],
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ]
    },
    {
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::*"
      ],
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:GetObjectVersion",
        "s3:GetBucketAcl",
        "s3:GetBucketLocation"
      ]
    }
  ]
}'
echo $update_code_build_policy > $PROJECT-update_code_build_policy.json
IFS=$OLDIFS

create_codebuild_role=$( aws iam create-role --role-name $PROJECT-codebuild-role --assume-role-policy-document file://$PROJECT-codebuild-service-role.json )
update_codebuild_role=$( aws iam put-role-policy --role-name $PROJECT-codebuild-role --policy-name $PROJECT-codebuild-policy --policy-document file://$PROJECT-update_code_build_policy.json )
# give the new IAM role a few seconds to propagate
for i in {1..10}; do sleep 1; echo -n "."; done
echo

pause "Create Code Build project ..."
code_build_project=$(aws codebuild create-project \
  --name "$PROJECT" \
  --source "{\"type\": \"CODECOMMIT\",\"location\":\"$repohttp\"}" \
  --artifacts '{"type": "NO_ARTIFACTS"}' \
  --environment "{\"privilegedMode\": true,\"type\": \"LINUX_CONTAINER\",\"image\": \"aws/codebuild/amazonlinux2-x86_64-standard:2.0\",\"computeType\": \"BUILD_GENERAL1_SMALL\",\"imagePullCredentialsType\": \"CODEBUILD\"}" \
  --service-role "arn:aws:iam::$ACCOUNT_ID:role/$PROJECT-codebuild-role" \
  --region $REGION)

pause "Create Code pipeline dependencies ( role and permissions ) ..."
# create the aws codepipeline
OLDIFS=$IFS
IFS=''
# first create the role
CODEPIPEPOLICYROLE='{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Effect": "Allow",
      "Principal": {
        "Service": "codepipeline.amazonaws.com"
      }
    }
  ]
}
'
echo $CODEPIPEPOLICYROLE > $PROJECT-code-pipe-policy-role.json
IFS=$OLDIFS

createcodepipepolicy=$( aws iam create-role --role-name $PROJECT-code-pipe-policy-role --assume-role-policy-document file://$PROJECT-code-pipe-policy-role.json )

# then update the policy with the appropriate permissions ( below results in a template that currently works for an existing bg pipe )
OLDIFS=$IFS
IFS=''
UPDATECODEPIPEPOLICY='{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "iam:PassRole"
      ],
      "Resource": "*",
      "Effect": "Allow",
      "Condition": {
        "StringEqualsIfExists": {
          "iam:PassedToService": [
            "cloudformation.amazonaws.com",
            "elasticbeanstalk.amazonaws.com",
            "ec2.amazonaws.com",
            "ecs-tasks.amazonaws.com"
          ]
        }
      }
    },
    {
      "Action": [
        "codecommit:CancelUploadArchive",
        "codecommit:GetBranch",
        "codecommit:GetCommit",
        "codecommit:GetUploadArchiveStatus",
        "codecommit:UploadArchive"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "codedeploy:CreateDeployment",
        "codedeploy:GetApplication",
        "codedeploy:GetApplicationRevision",
        "codedeploy:GetDeployment",
        "codedeploy:GetDeploymentConfig",
        "codedeploy:RegisterApplicationRevision"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "elasticbeanstalk:*",
        "ec2:*",
        "elasticloadbalancing:*",
        "autoscaling:*",
        "cloudwatch:*",
        "s3:*",
        "sns:*",
        "cloudformation:*",
        "rds:*",
        "sqs:*",
        "ecs:*"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "lambda:InvokeFunction",
        "lambda:ListFunctions"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "opsworks:CreateDeployment",
        "opsworks:DescribeApps",
        "opsworks:DescribeCommands",
        "opsworks:DescribeDeployments",
        "opsworks:DescribeInstances",
        "opsworks:DescribeStacks",
        "opsworks:UpdateApp",
        "opsworks:UpdateStack"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "cloudformation:CreateStack",
        "cloudformation:DeleteStack",
        "cloudformation:DescribeStacks",
        "cloudformation:UpdateStack",
        "cloudformation:CreateChangeSet",
        "cloudformation:DeleteChangeSet",
        "cloudformation:DescribeChangeSet",
        "cloudformation:ExecuteChangeSet",
        "cloudformation:SetStackPolicy",
        "cloudformation:ValidateTemplate"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "codebuild:BatchGetBuilds",
        "codebuild:StartBuild"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "devicefarm:ListProjects",
        "devicefarm:ListDevicePools",
        "devicefarm:GetRun",
        "devicefarm:GetUpload",
        "devicefarm:CreateUpload",
        "devicefarm:ScheduleRun"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "servicecatalog:ListProvisioningArtifacts",
        "servicecatalog:CreateProvisioningArtifact",
        "servicecatalog:DescribeProvisioningArtifact",
        "servicecatalog:DeleteProvisioningArtifact",
        "servicecatalog:UpdateProduct"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "cloudformation:ValidateTemplate"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "ecr:DescribeImages"
      ],
      "Resource": "*",
      "Effect": "Allow"
    }
  ]
}
'
echo $UPDATECODEPIPEPOLICY > $PROJECT-code-pipe-policy-update.json
IFS=$OLDIFS

updatecodepipepolicy=$( aws iam put-role-policy --role-name $PROJECT-code-pipe-policy-role --policy-name $PROJECT-code-pipe-policy --policy-document file://$PROJECT-code-pipe-policy-update.json )
for i in {1..10}; do sleep 1; echo -n "."; done
echo

# Now create the pipeline with the above role
OLDIFS=$IFS
IFS=''
CODE_PIPELINE='{
  "pipeline": {
    "name": "'$PROJECT'-pipeline",
    "roleArn": "arn:aws:iam::'$ACCOUNT_ID':role/'$PROJECT'-code-pipe-policy-role",
    "artifactStore": {
      "type": "S3",
      "location": "'$ARTIFACT_LOCATION'"
    },
    "stages": [
      {
        "name": "Source",
        "actions": [
          {
            "inputArtifacts": [],
            "name": "Source",
            "region": "'$REGION'",
            "actionTypeId": {
              "category": "Source",
              "owner": "AWS",
              "version": "1",
              "provider": "CodeCommit"
            },
            "outputArtifacts": [ { "name": "SourceArtifact" } ],
            "configuration": {
              "PollForSourceChanges": "false",
              "BranchName": "'$BRANCH'",
              "RepositoryName": "'$PROJECT'"
            },
            "runOrder": 1
          }
        ]
      },
      {
        "name": "Build",
        "actions": [
          {
            "inputArtifacts": [ { "name": "SourceArtifact" } ],
            "name": "Build",
            "region": "'$REGION'",
            "actionTypeId": {
              "category": "Build",
              "owner": "AWS",
              "version": "1",
              "provider": "CodeBuild"
            },
            "outputArtifacts": [ { "name": "BuildArtifact" } ],
            "configuration": {
              "ProjectName": "'$PROJECT'"
            },
            "runOrder": 1
          }
        ]
      },
      {
        "name": "Deploy",
        "actions": [
          {
            "inputArtifacts": [ { "name": "BuildArtifact" } ],
            "name": "Deploy",
            "region": "'$REGION'",
            "actionTypeId": {
              "category": "Deploy",
              "owner": "AWS",
              "version": "1",
              "provider": "ECS"
            },
            "outputArtifacts": [],
            "configuration": {
              "ClusterName": "'$CLUSTER_NAME'",
              "ServiceName": "'$PROJECT'-service"
            },
            "runOrder": 1
          }
        ]
      }
    ],
    "version": 1
  }
}
'
echo $CODE_PIPELINE > $PROJECT-codepipe.json
IFS=$OLDIFS

pause "Create code pipeline ..."
create_code_pipeline=$( aws codepipeline create-pipeline --cli-input-json file://$PROJECT-codepipe.json --region $REGION )

pause "Create ECR repository ..."
createecrrepo=$( aws ecr create-repository --repository-name $PROJECT --region $REGION 2>/dev/null )
ecrrepouri=$(echo $createecrrepo | jq -r '.repository.repositoryUri' 2>/dev/null )
if [ -z "$ecrrepouri" ]; then
  listecrrepo=$( aws ecr describe-repositories --repository-names $PROJECT --region $REGION )
  ecrrepouri=$(echo $listecrrepo | jq -r '.repositories[].repositoryUri' 2>/dev/null )
fi
echo "ECR Repo URI: $ecrrepouri"

pause "Create codecommit trigger using cloudwatch event and rule ..."
OLDIFS=$IFS
IFS=''
cloudwatch_rule='{
  "source": [
    "aws.codecommit"
  ],
  "detail-type": [
    "CodeCommit Repository State Change"
  ],
  "resources": [
    "arn:aws:codecommit:'$REGION':'$ACCOUNT_ID':'$PROJECT'"
  ],
  "detail": {
    "event": [
      "referenceCreated",
      "referenceUpdated"
    ],
    "referenceType": [
      "branch"
    ],
    "referenceName": [
      "'$BRANCH'"
    ]
  }
}
'

cloudwatch_role_trust_policy='{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Effect": "Allow",
      "Principal": {
        "Service": "events.amazonaws.com"
      }
    }
  ]
}
'
echo $cloudwatch_role_trust_policy > $PROJECT-cloudwatch_role_trust_policy.json
IFS=$OLDIFS

pause "Create cloudwatch role ..."
cloudwatch_role=$( aws iam create-role --role-name $PROJECT-cloudwatch-role --assume-role-policy-document file://$PROJECT-cloudwatch_role_trust_policy.json )

OLDIFS=$IFS
IFS=''
update_cloudwatch_role_policy='{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "codepipeline:StartPipelineExecution"
      ],
      "Resource": [
        "arn:aws:codepipeline:'$REGION':'$ACCOUNT_ID':'$PROJECT'-pipeline"
      ]
    }
  ]
}
'
echo $update_cloudwatch_role_policy > $PROJECT-update_cloudwatch_role_policy.json
IFS=$OLDIFS

pause "Attach policy to cloudwatch role ..."
update_cloudwatch_policy=$( aws iam put-role-policy --role-name $PROJECT-cloudwatch-role --policy-name $PROJECT-cloudwatch-policy --policy-document file://$PROJECT-update_cloudwatch_role_policy.json )
for i in {1..10}; do sleep 1; echo -n "."; done
echo

pause "Create rule for cloudwatch/codecommit trigger ..."
cloudwatch_rule=$( aws events put-rule --name "codepipeline-$PROJECT-$BRANCH-rule" --event-pattern "$cloudwatch_rule" --region $REGION )

pause "Create target for cloudwatch/codecommit trigger ..."
cloudwatch_target=$( aws events put-targets --rule "codepipeline-$PROJECT-$BRANCH-rule" --targets "Id=codepipeline-$PROJECT-pipeline,Arn=arn:aws:codepipeline:$REGION:$ACCOUNT_ID:$PROJECT-pipeline,RoleArn=arn:aws:iam::$ACCOUNT_ID:role/$PROJECT-cloudwatch-role" --region $REGION )

pause "Move the current dir/code to tmp, clone repo, cp tmp dir contents to new git repo dir ..."
cd ..
mv $PROJECT $PROJECT-tmp
git clone $repossh
cp -r $PROJECT-tmp/* $PROJECT/
cd $PROJECT
ls -ltra

pause "Push initial image to ECR repository? ecr repouri: $ecrrepouri"
dockerpull=$( docker pull nginx )
dockertag=$( docker tag nginx:latest $ecrrepouri:latest )
aws ecr get-login-password --region $REGION | docker login --username AWS --password-stdin $ACCOUNT_ID.dkr.ecr.$REGION.amazonaws.com
dockerpush=$( docker push $ecrrepouri:latest )

pause "Update git repo and push to branch: $BRANCH ..."
git add .
git commit -m "deploy_$(date +'%Y-%m-%d %H:%M')"
# push to the branch the CloudWatch rule is watching
git push origin HEAD:$BRANCH

echo
echo
echo "~~~~~~~ Done !!!!! ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~"
echo "Project URL: $dns"
echo " git repo: 'git clone $repossh'"
echo "~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~"
echo
```

The above script has several sections that play some tricks with variables, temp files, and single and double quotes, so the best way to use this program is to download it here:

So go ahead and extract it, change the variables to match your environment, chmod it to 700 ( or whatever you think is appropriate for your env ), and let me know how it goes. When you first run the program, it pauses and displays your settings like this:

```
$> ./simple_cicd_pipeline MyNewCICD-Pipeline
VPC : vpc-<DEFAULT_VPC>
Subnets : <LIST_OF_SUBNETS>
Security Groups : <SECURITY_GROUPS>
Region : <REGION>
Cert : arn:aws:acm:<REGION>:<AWS_ACCOUNT_ID>:certificate/<CERT_ID>
Cluster ARN : arn:aws:ecs:<REGION>:<AWS_ACCOUNT_ID>:cluster/<YOUR_FARGATE_CLUSTER>
Image ARN : <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/MyNewCICD-Pipeline
Artifact Location: s3://<S3_BUCKET>
Code Branch : <GIT_BRANCH>

Ready to build ???, press any key to continue, cntl+c to exit ...
```

So fill in the variables to match your local environment, then launch the script to create your complete end-to-end CI/CD pipeline, and please feel free to comment and let me know how it goes. I will be making adjustments to further automate the process, along with the cleanup script to remove the project when you’re finished testing.
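For the cleanup side, here is a rough, hypothetical teardown sketch ( not the author’s godelete.sh ) that mirrors the resource names the build script uses. It defaults to a dry run that only prints the aws commands; set DRY_RUN=0 to execute them. The load balancer, target groups, task definition revisions and S3 artifacts are deliberately left out because they need ARN or revision lookups first. Treat it as an outline to review, not a tested cleanup tool.

```bash
#!/usr/bin/env bash
# Hypothetical teardown outline. With DRY_RUN=1 (the default) commands are
# only printed; with DRY_RUN=0 they are executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

teardown_sketch() {
  local project=$1 region=$2 cluster=$3 branch=$4
  local rule="codepipeline-$project-$branch-rule"
  # Remove the trigger first so nothing starts the pipeline mid-teardown.
  run aws events remove-targets --rule "$rule" --ids "codepipeline-$project-pipeline" --region "$region"
  run aws events delete-rule --name "$rule" --region "$region"
  run aws codepipeline delete-pipeline --name "$project-pipeline" --region "$region"
  run aws codebuild delete-project --name "$project" --region "$region"
  # --force deletes the service without scaling it down to zero tasks first.
  run aws ecs delete-service --cluster "$cluster" --service "$project-service" --force --region "$region"
  run aws ecr delete-repository --repository-name "$project" --force --region "$region"
  run aws codecommit delete-repository --repository-name "$project" --region "$region"
  # Inline policies must be removed before a role can be deleted.
  local pair
  for pair in codebuild-role:codebuild-policy code-pipe-policy-role:code-pipe-policy cloudwatch-role:cloudwatch-policy; do
    run aws iam delete-role-policy --role-name "$project-${pair%%:*}" --policy-name "$project-${pair#*:}"
    run aws iam delete-role --role-name "$project-${pair%%:*}"
  done
}

teardown_sketch demo us-west-1 my-fargate-cluster main
```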

Good luck!